3Dm™: Advanced Data Management for Large-Scale 3D Tissue Imaging

Unlock the power of automated registration, alignment, and flat field correction for your large 3D imaging datasets.

The 3Dm™ pipeline is engineered for the automated processing of large and ultra-large datasets, ensuring seamless integration and analysis.

Automate 3D image alignment, stitching, and correction with unmatched speed and precision.

Automated 3D Stitching & Registration

Precise Alignment

Automatically stitches image tiles after acquisition, delivering high accuracy and efficiency across large datasets.

Reduced Manual Intervention

Minimizes the need for manual adjustments, saving time and resources while ensuring consistent results.

Enhanced Data Integrity

Maintains the integrity of your data through automated, standardized processes, reducing the risk of errors.

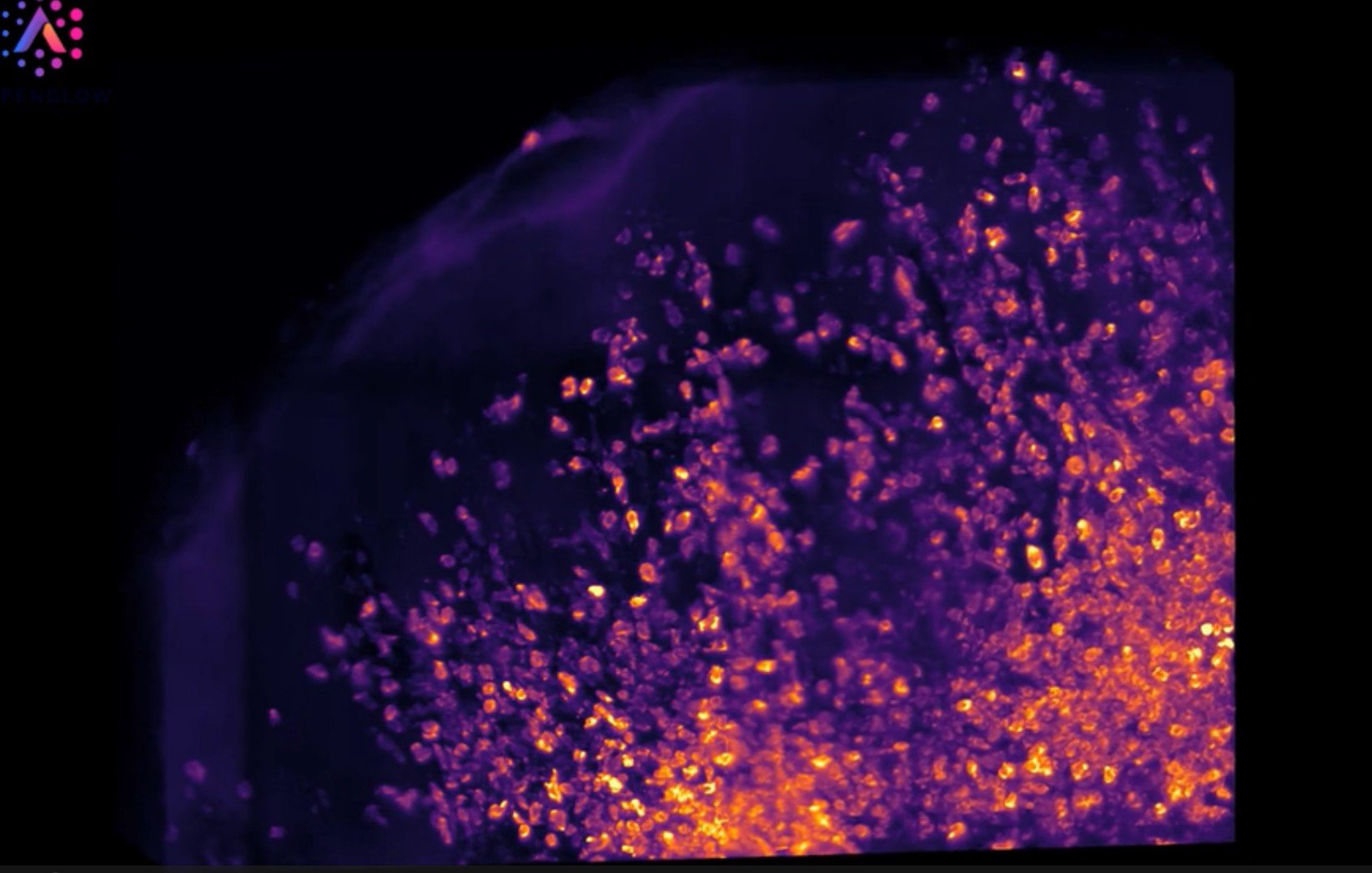

Flat‑Field Correction for 3D Tissue Imaging

Uniform Illumination

Corrects for uneven illumination across the imaging field, providing consistent brightness and contrast.
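As an illustration of the general idea, here is a minimal sketch of the classic flat-field formula, corrected = (raw - dark) / (flat - dark) × mean(flat - dark), applied to a single 2D plane. It is not the 3Dm™ implementation; the function name `flat_field_correct`, the `eps` guard, and the sample values are assumptions made for this example.

```python
from statistics import mean

def flat_field_correct(raw, flat, dark, eps=1e-6):
    """Apply the classic flat-field formula to one 2D plane (a list of rows)."""
    # Gain map: the flat-field reference with the dark offset removed.
    gain = [[f - d for f, d in zip(frow, drow)] for frow, drow in zip(flat, dark)]
    # Rescale by the mean gain so corrected values stay in a familiar intensity range.
    gain_mean = mean(v for row in gain for v in row)
    return [
        [(r - d) * gain_mean / max(g, eps) for r, d, g in zip(rrow, drow, grow)]
        for rrow, drow, grow in zip(raw, dark, gain)
    ]

# Hypothetical data: a uniform sample imaged under illumination that falls off to the right.
illumination = [[1.0, 0.8, 0.5], [1.0, 0.8, 0.5]]
dark = [[10.0] * 3 for _ in range(2)]
raw = [[100.0 * i + 10.0 for i in row] for row in illumination]
flat = [[200.0 * i + 10.0 for i in row] for row in illumination]

corrected = flat_field_correct(raw, flat, dark)
values = [v for row in corrected for v in row]
print(max(values) - min(values))  # spread across the field after correction
```

In this toy case the corrected plane is uniform because the illumination falloff appears identically in the raw and flat-field images and cancels in the ratio.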

Artifact Removal

Eliminates artifacts and distortions, resulting in clearer and more accurate images for analysis.

Improved Analysis

Enhances the quality of data, making it easier to identify and quantify features of interest in your samples.

Destriping

Stripe artifacts from uneven illumination or shadows are automatically detected and smoothed to produce cleaner 3D images.
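One simple way to picture destriping is median-based column normalization, sketched below. This is a basic baseline chosen for illustration, not the method 3Dm™ uses; it assumes the stripes are vertical and additive, and the function name `destripe_columns` and the sample image are hypothetical.

```python
from statistics import median

def destripe_columns(image):
    """Remove additive vertical stripes by aligning each column's median to the global median."""
    columns = list(zip(*image))                      # transpose rows into columns
    col_medians = [median(col) for col in columns]   # robust brightness estimate per column
    global_median = median(col_medians)              # target level shared by all columns
    offsets = [m - global_median for m in col_medians]
    return [[v - o for v, o in zip(row, offsets)] for row in image]

# Hypothetical image: column 1 is 5 units too bright, column 3 is 3 units too dark.
striped = [
    [10.0, 15.0, 10.0, 7.0],
    [12.0, 17.0, 12.0, 9.0],
    [11.0, 16.0, 11.0, 8.0],
]
clean = destripe_columns(striped)
for row in clean:
    print(row)
```

A baseline like this also flattens any genuine column-wise structure in the sample, which is why production destriping typically relies on more selective filtering.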

Deconvolved, Multi-Channel Fluorescence Integration

Integrated Channels

Deconvolves and integrates multiple fluorescence channels, creating a comprehensive dataset for seamless analysis.

Accurate Co-Localization

Ensures precise co-localization of markers, providing valuable insights into cellular interactions.
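Co-localization between two registered channels is commonly summarized with the Pearson correlation coefficient of their pixel intensities. The sketch below shows that general measure; it is not a description of how 3Dm™ computes co-localization, and the function name `pearson_colocalization` and the sample channels are assumptions for this example.

```python
from math import sqrt

def pearson_colocalization(channel_a, channel_b):
    """Pearson correlation of pixel intensities between two aligned 2D channels."""
    a = [v for row in channel_a for v in row]
    b = [v for row in channel_b for v in row]
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        # The coefficient is undefined for a constant channel; 0.0 is a convention chosen here.
        return 0.0
    return cov / sqrt(var_a * var_b)

# Hypothetical channels: `green` tracks `red` linearly, `blue` is its inverse.
red = [[1.0, 2.0], [3.0, 4.0]]
green = [[7.0, 9.0], [11.0, 13.0]]
blue = [[4.0, 3.0], [2.0, 1.0]]

r_same = pearson_colocalization(red, green)
r_inverse = pearson_colocalization(red, blue)
print(r_same, r_inverse)
```

The measure is only meaningful when the channels are accurately registered, since a small spatial offset between them lowers the coefficient.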

Streamlined Workflow

Simplifies the process of combining and analyzing data from different channels, improving efficiency.

Ultra-Large Dataset Processing Capabilities

Scalable Processing

Handles datasets of any size, from gigabytes to terabytes, using a custom, fully automated pipeline to ensure efficient processing and analysis.
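Pipelines that scale to terabyte volumes generally avoid loading the whole dataset at once and instead walk it block by block. The sketch below shows that general pattern only; the function name `iter_blocks` and the shapes are hypothetical and do not reflect the actual 3Dm™ pipeline.

```python
from itertools import product

def iter_blocks(shape, block):
    """Yield (start, stop) index tuples that tile a volume of `shape` with blocks of size `block`."""
    ranges = [range(0, size, step) for size, step in zip(shape, block)]
    for starts in product(*ranges):
        # Clip the last block along each axis so it never runs past the volume edge.
        stops = tuple(min(s + b, size) for s, b, size in zip(starts, block, shape))
        yield starts, stops

# Hypothetical (z, y, x) volume of 5 x 4 x 4 voxels, processed in 2 x 4 x 2 blocks.
blocks = list(iter_blocks((5, 4, 4), (2, 4, 2)))
for start, stop in blocks:
    print(start, stop)
```

Each block can then be read, processed, and written independently, which keeps memory use bounded by the block size rather than the dataset size.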

High-Throughput

Optimized for high-throughput workflows with GPU-accelerated performance, enabling rapid processing of large numbers of samples.

Resource Optimization

Utilizes advanced algorithms to balance workload distribution, reduce computational overhead, and ensure consistent performance even under demanding conditions.

Applications & Success Stories

- Accelerate drug development with enhanced image analysis and data management.
- Enhance clinical research with seamless integration of multi-channel fluorescence data.
- Discover novel biomarkers with ultra-large dataset processing capabilities.